DMM and DLsite Book (.dmmb/.dlst) Image Ripper

Jun 17 2020, 04:37


zxc102635

Lurker

Group: Lurkers

Posts: 1

Joined: 6-August 18


The DLsite Viewer's default magnification is "auto".

With viewerrip ("Manually setting zoom to 100% is no longer necessary"), the output is too large.


Jun 18 2020, 00:55


Moonlight Rambler

Group: Gold Star Club

Posts: 6,538

Joined: 22-August 12


QUOTE(genl @ Apr 21 2020, 08:34)

Use PNGGauntlet to compress them as much as possible.

Or pngcrush. I have a wrapper script for it, too (remove '-brute' for much faster runs but possibly worse compression). Note that, as currently written, if pngcrush exits with a bad return code, the script will not process any images after the one that made it fail. Yes, I know it's ugly. I wrote it at 2 AM on a Wednesday to compress some high-resolution uncompressed PNG files someone had uploaded.

CODE

#! /usr/bin/env sh

# pngcrush-replace:

# run pngcrush on an image, and if the resulting PNG image is smaller, replace

# the original with it. Tries to handle program failures & empty outputs

# gracefully (e.g., not replacing the original with an empty image).

# This version also can remove colorspace information since pngcrush with

# new-ish libpng (from the last year or so) likes to quit with failure

# on images with 'known bad sRGB profiles.' To do so, add

# '-rem allb' to the pngcrush arguments below in the script.

# usage: pngcrush-replace <list of filenames>

# Depends on the following non-POSIX programs:

# - mktemp (can replace with just a 'touch' probably)

# - dc (for calculating file sizes in megabytes (actually MiB))

# (could be replaced with 'bc' if you so desire, but 'dc' is in busybox

# and 'bc' isn't, and also I prefer RPN calculators.)

# - pngcrush (duh)

TMPFILE="$(mktemp -t 'crush_XXXXXX.png')"

cleanupfunc() # catch ^C and similar and delete temp files before exiting

{

if [ -e "$TMPFILE" ]; then

rm "$TMPFILE"

fi

exit "${1:-0}" # use the status passed as $1, if any (default 0)

}

trap 'cleanupfunc 1' HUP INT QUIT ABRT TERM

TOTAL_COUNT="$#"

CUR=1

while [ "$#" -gt 0 ]; do

# clear old temp file from last loop so 'file' command is accurate for sure

printf '' > "$TMPFILE"

echo 'Crushing '"$1"'… ('"$CUR"' of '"$TOTAL_COUNT"')'

OLDSIZE="$(wc -c < "$1")" # old size in bytes

# pngcrush -rem allb -reduce "$1" "$TMPFILE"

pngcrush -brute "$1" "$TMPFILE"

RETCODE="$?"

if [ "$RETCODE" -eq 0 ]; then

NEWSIZE="$(wc -c < "$TMPFILE")"

if [ "$NEWSIZE" -lt "$OLDSIZE" ]; then

# make sure it's a valid PNG file (not empty or something at least)

if file "$TMPFILE" | grep -q 'PNG image'; then

# replace original image and print out the size difference in MiB

cat "$TMPFILE" > "$1" # don't change file permissions like cp would

echo "$(echo '2k '"$OLDSIZE"' 1048576 / p' | dc)"'MiB -> '"$(echo '2k '"$NEWSIZE"' 1048576 / p' | dc)"'MiB'

else

1>&2 echo 'pngcrush failed to make a valid result from '"$1"'.'

fi

else

1>&2 echo 'pngcrush failed to reduce the size of '"$1"'.'

1>&2 echo 'Not overwriting.'

# print size comparison in MiB

1>&2 echo '('"$(echo '2k '"$OLDSIZE"' 1048576 / p' | dc)"'MiB -> '"$(echo '2k '"$NEWSIZE"' 1048576 / p' | dc)"'MiB)'

fi

else

1>&2 echo 'Error: pngcrush exited with status: '"$RETCODE"

1>&2 echo 'While processing file: '"$1"

1>&2 echo 'Cleaning up and exiting without replacing the original.'

cleanupfunc 1

fi

shift 1

CUR=$((CUR + 1))

done

# remove trap for ^C and other signals that would kill the program

trap - HUP INT QUIT ABRT TERM

# run cleanup (delete temp files)

cleanupfunc

Also, here's a patch to pngcrush's sources that makes it work despite modern libpng's "iCCP: known incorrect sRGB profile" warnings (Photoshop sometimes likes to produce bad PNGs like this).

CODE

diff --git a/Makefile b/Makefile

index 79e12a9..e1400a5 100644

--- a/Makefile

+++ b/Makefile

@@ -23,7 +23,7 @@ RM = rm -f

CPPFLAGS = -I $(PNGINC)

-CFLAGS = -g -O3 -fomit-frame-pointer -Wall

+CFLAGS = -g -O3 -fomit-frame-pointer -Wall -DPNG_IGNORE_SRGB_ICCP_HACK=1

# [note that -Wall is a gcc-specific compilation flag ("all warnings on")]

LDFLAGS =

O = .o

diff --git a/pngcrush.c b/pngcrush.c

index d0c387e..e449788 100644

--- a/pngcrush.c

+++ b/pngcrush.c

@@ -5527,6 +5527,12 @@ int main(int argc, char *argv[])

}

#endif

+#ifdef PNG_IGNORE_SRGB_ICCP_HACK

+ /* hack */

+ png_set_option(read_ptr, PNG_SKIP_sRGB_CHECK_PROFILE, PNG_OPTION_ON);

+ png_set_option(write_ptr, PNG_SKIP_sRGB_CHECK_PROFILE, PNG_OPTION_ON);

+#endif

+

#ifndef PNGCRUSH_CHECK_ADLER32

# ifdef PNG_IGNORE_ADLER32

if (last_trial == 0)

Apply it with `patch -p1 < patch-filename.patch`.

QUOTE(genl @ Apr 21 2020, 08:34)

In DMM's case, DMMB/DMME files contain unscrambled images at the original quality, while the browser viewer's version has lower quality because of the scrambling. This means that if you screen-capture pages from DMMB/DMME at the right zoom/resolution, you'll end up with 100%-quality images, better than the scrambled images in the browser viewer. Still, you'll have to choose between lower-quality JPEG and oversized PNG.

If the original files were not lossless, yes, the PNG is larger. If the original files are lossless, the PNG should not be significantly larger (if at all).

QUOTE(genl @ Apr 21 2020, 08:34)

DMM used to provide actual 100% quality through the browser viewer before they started to use scrambling (a few years ago).

That's a shame.

This post has been edited by dragontamer8740: Jun 18 2020, 01:32


Jun 19 2020, 17:35


ilwaz

Group: Gold Star Club

Posts: 168

Joined: 3-January 12


QUOTE(dragontamer8740 @ Jun 17 2020, 18:55)

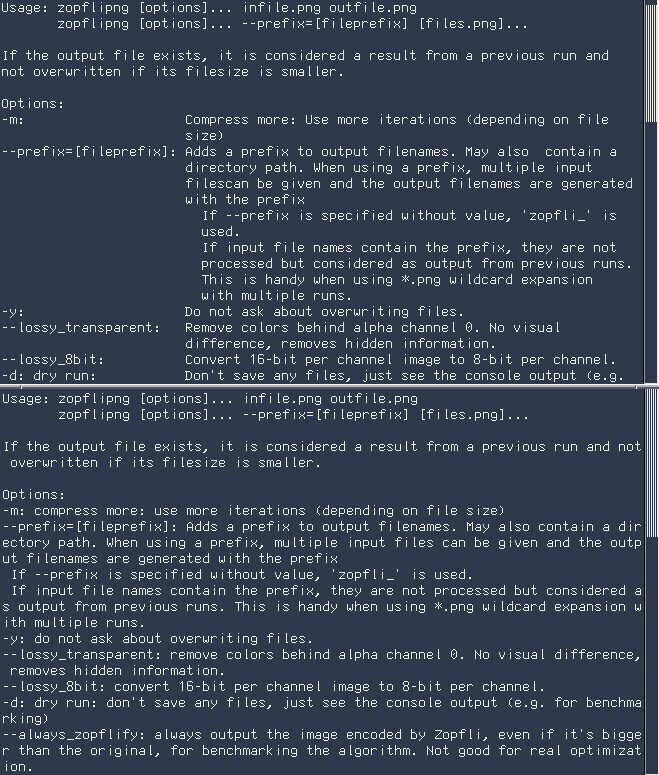

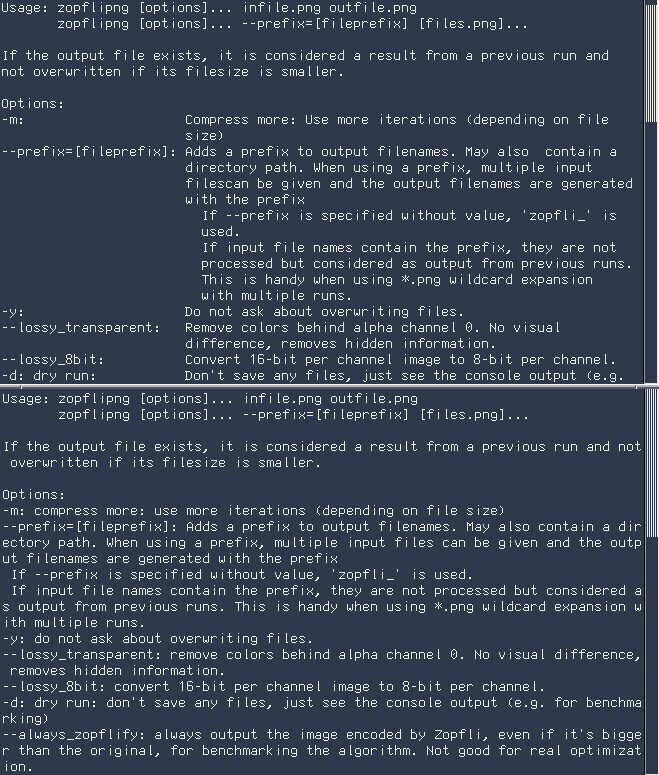

Or pngcrush.

Use zopflipng with "-m" instead of pngcrush with "-brute"; it compresses PNGs more and is faster to boot. Source: my thread on lossless compression.

This post has been edited by ilwaz: Jun 19 2020, 17:35


Jun 19 2020, 23:49


Moonlight Rambler

Group: Gold Star Club

Posts: 6,538

Joined: 22-August 12


QUOTE(ilwaz @ Jun 19 2020, 11:35)

Use zopflipng with "-m" instead of pngcrush with "-brute"; it compresses PNGs more and is faster to boot. Source: my thread on lossless compression.

Four-year-old post is four years old (the spreadsheet's from 2016). But I'll still make a wrapper for it as well. It does appear to be a bit better. Still, that feeling when a program doesn't give a shit about 80-column line-breaking conventions for its help text and doesn't even break on words. I tinkered with it a little; it's not perfect, but it's far more legible for me now. Also, it did compress this PNG smaller than pngcrush did, so it has that going for it.

This post has been edited by dragontamer8740: Jun 20 2020, 00:28
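A minimal sketch of such a wrapper, in the same spirit as the pngcrush-replace script earlier in the thread: it runs zopflipng -m on each file and overwrites the original only when the output is non-empty and smaller. It assumes zopflipng is on PATH and relies on its -y flag (overwrite the output without asking). The names zopfli_replace and replace_if_smaller are hypothetical, not part of any existing tool.

```sh
#!/bin/sh
# zopfli-replace (hypothetical name): a sketch modeled on pngcrush-replace.
# Assumes zopflipng is installed; -m = "compress more", -y = overwrite the
# output file without prompting.

# Overwrite $1 with the contents of $2, but only if $2 is non-empty and
# strictly smaller. Returns nonzero (and leaves $1 alone) otherwise.
replace_if_smaller() {
    [ -s "$2" ] || return 1
    old=$(wc -c < "$1")
    new=$(wc -c < "$2")
    [ "$new" -lt "$old" ] || return 1
    cat "$2" > "$1"    # rewrite in place, so the original permissions are kept
}

zopfli_replace() {
    # A private temp directory sidesteps mktemp template/suffix differences.
    tmpdir=$(mktemp -d) || return 1
    status=0
    for f in "$@"; do
        out="$tmpdir/out.png"
        rm -f "$out"
        if ! zopflipng -m -y "$f" "$out" >/dev/null 2>&1; then
            echo "zopflipng failed on $f; skipping" >&2
            status=1
        elif replace_if_smaller "$f" "$out"; then
            echo "shrunk: $f"
        else
            echo "not smaller, kept original: $f" >&2
        fi
    done
    rm -rf "$tmpdir"
    return "$status"
}

# Process files only when arguments are given.
if [ "$#" -gt 0 ]; then
    zopfli_replace "$@"
fi
```

Unlike the pngcrush version, a failure on one file only marks the exit status and moves on to the next file instead of stopping the whole run.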


Jun 20 2020, 02:30


ilwaz

Group: Gold Star Club

Posts: 168

Joined: 3-January 12


QUOTE(dragontamer8740 @ Jun 19 2020, 17:49)

Four year old post is four years old (spreadsheet's from 2016). But I'll still make a wrapper for it as well. It appears to be a bit better.

It's old, but not yet outdated. There hasn't been much development in PNG optimizers. I am actually updating the data, though; there have been some cool new formats for lossless images.

QUOTE(dragontamer8740 @ Jun 19 2020, 17:49)

Still, that feeling when a program doesn't give a shit about 80 column line breaking conventions for its help text and also doesn't break on words. I tinkered with it a little, it's not perfect but far more legible for me now. Also, it did compress this png smaller than pngcrush did, so it has that going for it.

You might benefit from running multiple processes in parallel, if you're working on your script. Newer image-format encoders are multithreaded, but PNG optimizers generally are not.
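As a rough illustration of that suggestion, here is one way to fan a per-file command out across cores from a Bourne shell. It assumes an xargs that supports -0 and -P (GNU findutils and the BSDs do; -P is not in POSIX) and falls back to 2 jobs when nproc (a GNU coreutils tool) is missing. The function name parallel_each is hypothetical.

```sh
#!/bin/sh
# parallel_each CMD FILE...: run "CMD FILE" once per file, several at a time.
# CMD must be an executable (xargs cannot call shell functions).
parallel_each() {
    cmd="$1"
    shift
    [ "$#" -gt 0 ] || return 0
    # One job per CPU if nproc is available, otherwise a conservative 2.
    njobs=$(nproc 2>/dev/null) || njobs=2
    # NUL-separated names survive spaces and newlines in filenames.
    printf '%s\0' "$@" | xargs -0 -n 1 -P "$njobs" "$cmd"
}
```

For example, `parallel_each ./pngcrush-replace *.png` would run one pngcrush-replace per image. Because that script gets its temp file from mktemp, the parallel copies should not clobber each other's temp files, although their progress messages will interleave.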


Jun 20 2020, 03:39


Moonlight Rambler

Group: Gold Star Club

Posts: 6,538

Joined: 22-August 12


QUOTE(ilwaz @ Jun 19 2020, 20:30)

You might benefit from running multiple processes in parallel, if you're working on your script. Newer image format encoders are multithreaded but png optimizers generally are not.

My computers aren't very heavily threaded either :) One has two cores and the other four. I do most of this stuff on the two-core one because it's more portable (it's an old laptop). That said, maybe. Bourne shells start to show their deficiencies once you start parallelizing things, though.

Edit: zopflipng took 14 minutes on a 4000x4000 image on my two-core 2.13 GHz machine and about 12 on my four-core 3 GHz one (which I started a few minutes after the laptop). Yeesh. It shaved 2.5 MB or so off the originally 17 MB image, though. I have another version of the script that just runs the images through convert with default parameters (useful for uncompressed PNGs). That's much faster.

This post has been edited by dragontamer8740: Jun 20 2020, 04:48
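A sketch of what that faster convert-based variant might look like. It assumes ImageMagick's convert is on PATH (on ImageMagick 7 the preferred command is magick) and simply re-encodes each PNG with convert's default settings, keeping the result only if it is smaller. It also swaps the original script's dc arithmetic for POSIX awk when printing sizes in MiB. The names reencode_png and to_mib are hypothetical.

```sh
#!/bin/sh
# Print a byte count as MiB with two decimals (awk is POSIX, dc is not).
to_mib() {
    awk -v b="$1" 'BEGIN { printf "%.2f", b / 1048576 }'
}

# Re-encode each PNG with convert's defaults; keep the output only if smaller.
reencode_png() {
    tmpdir=$(mktemp -d) || return 1
    for f in "$@"; do
        out="$tmpdir/out.png"
        rm -f "$out"
        if convert "$f" "$out" 2>/dev/null && [ -s "$out" ]; then
            old=$(wc -c < "$f")
            new=$(wc -c < "$out")
            if [ "$new" -lt "$old" ]; then
                cat "$out" > "$f"   # rewrite in place; permissions unchanged
                echo "$f: $(to_mib "$old")MiB -> $(to_mib "$new")MiB"
            else
                echo "$f: not smaller, kept" >&2
            fi
        else
            echo "$f: convert failed, kept" >&2
        fi
    done
    rm -rf "$tmpdir"
}
```

One small difference from the dc version: dc with `2k` truncates to two decimals, while awk's printf rounds.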


Jun 20 2020, 20:55


xTtotal

Lurker

Group: Recruits

Posts: 4

Joined: 24-February 12


No idea whether people here are aware, but at DMM there seems to be a big difference between doujin and book DRM. In the browser you can download all the JPGs of "DRM'ed" doujins, which have no scrambling and appear to be full size, with a little manual work opening and saving them from the Network tab of the developer tools (tested recently with Firefox on Linux), which is just plain great. I did it with this one: https://www.dmm.co.jp/dc/doujin/-/detail/=/cid=d_173243/

No need to potentially sacrifice quality by scraping canvases when the file is just lying around on the server (provided you bring cookies).

QUOTE(城夜未央 @ Mar 21 2020, 08:49)

Hi, I uploaded an old DLsite viewer; remember to close the update prompt window. :) Download: http://www.mediafire.com/file/zehr14zvazic...erPack.exe/file

Lifesaver. I was always afraid to buy DRM'ed stuff because the items could just go away, either because DLsite/DMM or the artist removes them, because they go out of business, or for reasons that are entirely not the fault of DLsite/DMM, and I wouldn't want that.

This post has been edited by xTtotal: Jun 20 2020, 21:03


Jul 12 2020, 06:20


xTtotal

Lurker

Group: Recruits

Posts: 4

Joined: 24-February 12


QUOTE(Pillowgirl @ May 14 2018, 14:04)

QUOTE(sureok1 @ May 14 2018, 04:40)

Has anyone developed a method of ripping .dmme files that doesn't involve giving out your credentials to someone else over the internet?

Yes.

Details, please?


Aug 23 2020, 03:49


joey86

Newcomer

Group: Recruits

Posts: 13

Joined: 20-July 10


Guys, is there any solution for EPUB files from DMM?

I can easily rip anything in the .dmmb format, but it doesn't work with EPUB.

This post has been edited by joey86: Aug 23 2020, 03:50


Oct 4 2020, 02:48


spyps

Lurker

Group: Lurkers

Posts: 1

Joined: 30-November 13


QUOTE(Nalien @ Mar 21 2020, 05:11)

Here is an updated version of my guide to saving images from DMM's browser viewer, which is useful for .dmme files, with which viewerrip doesn't work.

Start Chrome with the --disable-web-security flag and with a different user. In Windows you can do this by pressing Win+R and executing

CODE

"C:\Program Files (x86)\Google\Chrome\Application\chrome.exe" --disable-web-security --user-data-dir=C:\chromeuser https://book.dmm.com/library/?age_limit=all&expired=1

Set your desired download folder in Chrome's settings.

Find the work you want to save in your library, open the DevTools with F12 or Ctrl+Shift+I and select the Network tab (if you don't do this beforehand, it may ask you to refresh the page). Start reading.

Click one of the 0.jpeg entries in the DevTools (you can click Img near the top to filter the images) to show the original image dimensions below the image preview.

With the DevTools still focused, press Ctrl+Shift+M to enable device mode. Open the device mode advanced options by clicking the vertical ellipsis in the top right and click Add device pixel ratio. Click DPR at the top and set it to 1. Set the size of the viewport to that of the original images by changing the values of the numerical inputs at the top center. This will make the images you download the same dimensions as the originals.

Open the Console tab of the DevTools, copy and paste the following code to download the images as JPEG, and press Enter.

CODE

filename = 1

a = document.createElement('a')

function downloadCanvas() {

a.href = document.querySelector('.currentScreen > canvas').toDataURL('image/jpeg')

a.download = filename++

a.click()

}

downloadCanvas()

Or this, to download the images as WebP, which have a smaller file size:

CODE

filename = 1

a = document.createElement('a')

function downloadCanvas() {

a.href = document.querySelector('.currentScreen > canvas').toDataURL('image/webp')

a.download = filename++

a.click()

}

downloadCanvas()

Set the viewport height again if it has been decreased by the newly appeared download bar.

Go to the next image, execute

CODE

downloadCanvas()

in the console, and repeat. When there is a two-page spread, temporarily double the viewport width.

You can focus the DevTools with F6, but if you just changed the viewport width you have to click the console to focus it again. To save the images quickly, I suggest placing the cursor on the left side of the viewport and repeating the following actions: click, F6, Up, Enter. You may want to enable Mouse Keys to click with Numpad 5.

When you save the wrong image, delete it and execute

CODE

--filename

in the console to decrement the next filename by one.

I tried this method with https://www.dlsite.com/books/work/=/product_id/BJ255317.html, but it didn't work. I got the error message "Cannot read property 'toDataURL' of null".


Oct 11 2020, 15:59


Nalien

Lurker

Group: Recruits

Posts: 9

Joined: 22-April 11


QUOTE(spyps @ Oct 4 2020, 02:48)

I tried this method with https://www.dlsite.com/books/work/=/product_id/BJ255317.html, but it didn't work. I got error message "Cannot read property 'toDataURL' of null"

My guide was for DMM. I checked the DLsite browser viewer: there are 3 canvases, for the current, previous and next pages, and the pages can be downloaded even while they aren't visible (unlike DMM). So you should execute

CODE

filename = 1

a = document.createElement('a')

function downloadCanvas() {

canvases = document.querySelectorAll('canvas')

for (canvas of [canvases[1], canvases[0], canvases[2]].filter(canvas => canvas)) {

a.href = canvas.toDataURL('image/jpeg')

a.download = filename++

a.click()

}

}

downloadCanvas()

after moving 1 page forward once, then

CODE

downloadCanvas()

every 3 pages. You will probably have to manually fix the numbers of the last pages if their count isn't a multiple of 3.

The device-mode trick isn't necessary here, since the canvases are drawn at a fixed size at any viewport dimensions and later adapted to the viewport. But for some reason they have slightly smaller dimensions and a bigger aspect ratio than the original images: on the manga I'm testing, the canvas is 740x1036 and the original images are 768x1152. I wouldn't know how to make them exact. But can't you rip DLsite images from the desktop application? That would be faster and more accurate.

As for the quality: I don't notice any visible difference when I convert some original PNGs anyway, so I don't mind downloading lighter images and saving disk space. In fact, I save them as WebP to save even more space (and there's no reason to use JPEG, an encoding from 1992, other than compatibility with Internet Explorer and older Safari versions). Though if you save them as WebP, you may want to try increasing the quality with the second argument of toDataURL:

CODE

toDataURL('image/webp', .85)

The defaults are .92 for JPEG and .8 for WebP. Avoiding multiple compressions would be nice, but it's not worth manually screenshotting every page. And it's not like the DMM versions have perfect quality to begin with. I often find higher-resolution versions on hitomi.la, not to mention the Fakku versions, which look way better. But I can see how you'd want the best possible quality if you plan to share them.

As for PNG compression: I recommend pngquant.

As for DRM-free DMM doujin: I don't get it, why don't you download the zip archives directly?

As for EPUB/dmmr files: I would also like to know. My image viewer guide was simply an adaptation of https://pastebin.com/GNYZDAUy, but I haven't found anything for dmmr. For now I just saved every page of the ones I had bought as images, which was painful, and I will see if an OCR application like tesseract can extract the text decently, but I don't think it works with furigana :(

For DMM/R18.com videos, I recommend hlsdl (on GitHub).

This post has been edited by Nalien: Oct 12 2020, 09:22


Nov 5 2020, 02:43


himuratakeshi

Lurker

Group: Lurkers

Posts: 1

Joined: 5-November 20


Guys, I'm new here. I want to know if it's possible to decensor a doujin with full white censorship. I have bought 2 works from Comic Mujin; the files are .dmmb. Thanks in advance.


Nov 11 2020, 06:48


草头将

Lurker

Group: Gold Star Club

Posts: 6

Joined: 15-February 14


Hello everyone, I get "Could not find a running supported viewer" when trying to rip content from a .dmmb file. I'm using DMM Books 3.1.9 on Windows 10 x64. Any ideas?

Thanks in advance for your replies.

Edit: never mind, an old version of the DMM reader still works.

This post has been edited by 草头将: Nov 11 2020, 07:13


Nov 15 2020, 16:11


ranfan

Group: Members

Posts: 636

Joined: 8-January 12


Interesting technique, thanks!

This post has been edited by ranfan: Nov 15 2020, 22:53


Dec 2 2020, 10:05


b51de

Newcomer

Group: Members

Posts: 49

Joined: 18-May 10


Happy to say that this still worked with the outdated DMM viewer version, but the first page is all wonky and full of moiré, and a blank second page was somehow generated.

I could probably piece together a couple screenshots for it though if it's just the first page or two messing up.

This post has been edited by blind51de: Dec 3 2020, 05:36


Dec 20 2020, 23:25


okawabi

Newcomer

Group: Recruits

Posts: 11

Joined: 4-October 14


Thanks, 城夜未央, for the old DLsite viewer. Does anyone happen to have the old DMM viewer?
